Introduction

The compliance team is drowning in alerts. Risk models are flagging false positives. And the auditors are asking questions about AI systems deployed two years ago that you can’t fully explain today. Sound familiar?

AI has quietly become the backbone of risk and compliance operations across BFSI. But here’s the uncomfortable truth: most institutions are using AI without the governance frameworks needed to manage it responsibly.

From Rule Books to Risk Intelligence

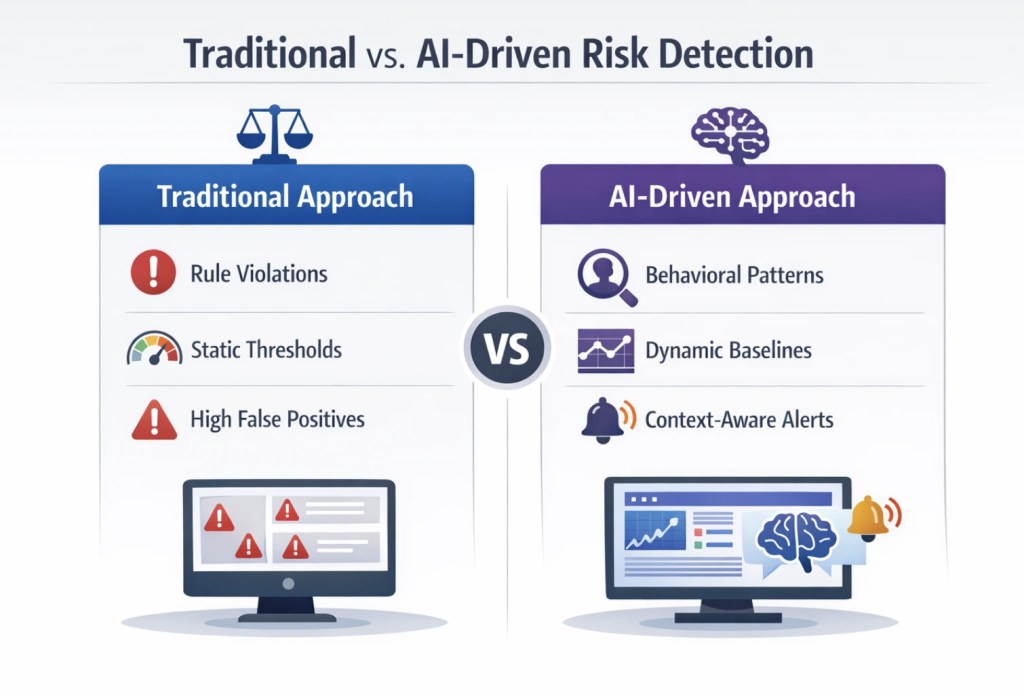

Remember when compliance was simple? If the transaction amount exceeds ₹10 lakhs, flag it. If a customer visits three branches in one day, raise an alert. These rigid rules worked when financial crime was predictable. It’s not anymore.

Today’s fraudsters don’t trip rule-based systems. They test boundaries, adapt quickly, and exploit the gaps between your rules. That’s why smart institutions are shifting to AI-powered risk intelligence that learns, adapts, and thinks contextually.

But this shift isn’t just about better technology. It’s about fundamentally rethinking how you manage risk and what governance looks like when machines make decisions.

What AI Is Actually Doing in Your Risk Operations

Let’s get specific about where AI is making a real difference:

Anomaly Detection That Actually Works

Traditional systems flag outliers based on static thresholds. AI models understand what “normal” looks like for each customer and can spot subtle deviations that signal real risk. A ₹50,000 transaction might be routine for one customer and highly suspicious for another. AI gets that nuance.
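To make the per-customer idea concrete, here is a minimal sketch that compares a new amount against each customer’s own history using a z-score. The function name, the threshold of 3, and the sample histories are all illustrative assumptions; production models use far richer signals than a single statistic.

```python
from statistics import mean, stdev

def is_anomalous(history, amount, z_threshold=3.0):
    """Flag an amount that deviates strongly from this customer's own history.

    z_threshold is an assumed cut-off, not a recommended value.
    """
    if len(history) < 2:
        return False  # not enough history to define "normal"
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return amount != mu  # every past amount was identical
    return abs(amount - mu) / sigma > z_threshold

# Hypothetical transaction histories, in rupees
salaried = [2_000, 3_500, 1_800, 2_700, 3_100]
business = [45_000, 60_000, 52_000, 48_000, 55_000]

print(is_anomalous(salaried, 50_000))  # True: far outside this customer's range
print(is_anomalous(business, 50_000))  # False: routine for this customer
```

The same ₹50,000 amount produces opposite answers because the baseline is the customer, not a global threshold.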

Behavioural Risk Scoring

Instead of scoring risk at the transaction level, AI builds comprehensive behavioural profiles. It considers transaction patterns, device usage, location data, timing, and dozens of other signals to assign real-time risk scores. This means fewer false alarms and faster detection of genuine threats.

Predictive Compliance

Here’s where it gets interesting. AI doesn’t just detect problems, it predicts them. Which customers are likely to default? Which transactions might be part of a money laundering scheme? Which operational processes are prone to compliance breaches? Predictive compliance lets you intervene before problems escalate. That’s a game-changer for institutions tired of always being reactive.

The Governance Challenge Nobody Talks About

Here’s the problem: AI models are powerful precisely because they’re complex. They find patterns humans miss. But that complexity creates a governance nightmare.

The Black Box Problem

Your AI model rejected a loan application. The customer wants to know why. Your team wants to know why. Your regulator definitely wants to know why. But can you actually explain how the model reached that decision?

If the answer is “not really,” you have a serious governance gap.

Managing Bias in Risk Models

AI models learn from historical data. If your historical lending data reflects past biases (and let’s be honest, it probably does), the AI model will perpetuate those biases, or even amplify them.

Progressive institutions are implementing bias testing as a core part of model governance. They’re actively monitoring outcomes across customer segments, testing for adverse impact, and retraining models when bias is detected.
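One simple way to test for adverse impact is to compare approval rates across segments. The sketch below computes each segment’s approval rate relative to the best-treated segment and flags any that fall below a cut-off. The 0.8 cut-off is borrowed from the widely used “four-fifths” rule of thumb and is an assumption here, not a threshold set by this article or by any Indian regulator; the segment names and counts are made up.

```python
def approval_rate(decisions):
    """decisions is a list of 1 (approved) and 0 (rejected)."""
    return sum(decisions) / len(decisions)

def adverse_impact_ratios(outcomes_by_segment):
    """Approval rate of each segment divided by the highest segment rate."""
    rates = {seg: approval_rate(d) for seg, d in outcomes_by_segment.items()}
    best = max(rates.values())
    if best == 0:
        return {seg: 1.0 for seg in rates}  # nobody approved: no relative gap
    return {seg: rate / best for seg, rate in rates.items()}

def flag_segments(outcomes_by_segment, min_ratio=0.8):
    """Segments whose ratio falls below the assumed cut-off."""
    ratios = adverse_impact_ratios(outcomes_by_segment)
    return sorted(seg for seg, r in ratios.items() if r < min_ratio)

# Hypothetical loan decisions for three customer segments
outcomes = {
    "segment_a": [1] * 80 + [0] * 20,  # 80% approved
    "segment_b": [1] * 56 + [0] * 44,  # 56% approved
    "segment_c": [1] * 72 + [0] * 28,  # 72% approved
}

print(flag_segments(outcomes))  # ['segment_b']
```

A flagged segment is a prompt for investigation, not proof of bias: the gap may have a legitimate explanation, but someone should be able to state it.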

This isn’t just about fairness; it’s about regulatory risk. Regulators globally are scrutinising AI bias, and India isn’t going to be far behind.

Building Human Oversight That Actually Works

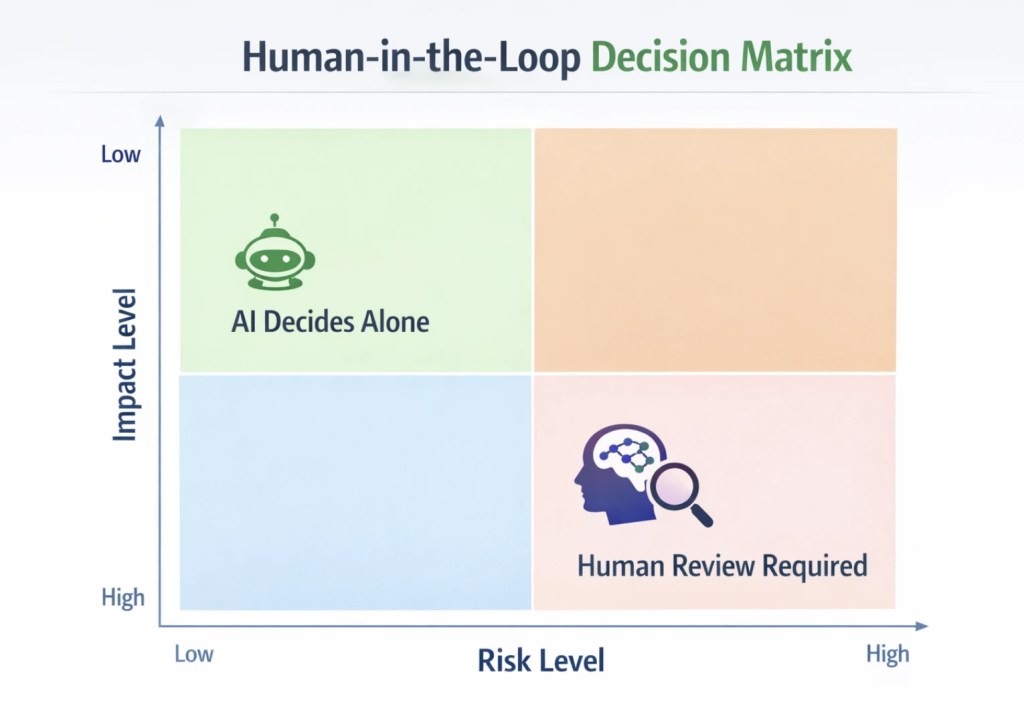

AI should augment human judgment, not replace it. Letting AI make decisions without human oversight can be detrimental. The fix isn’t adding more checkpoints. It’s designing smart oversight frameworks:

Build Exception Rules, Not Review Rules

Flip the logic. Instead of deciding what needs review, define what doesn’t. AI handles everything except: transactions above ₹10 lakhs, decisions affecting customer access, first-time high-risk customer interactions, and anything flagged with multiple anomaly signals. This keeps 95% of decisions automated while ensuring humans catch the 5% that actually matter. It’s faster to define the exceptions than to review everything.
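The exception list above translates almost directly into a routing check. In this sketch the `Decision` fields and the reading of “multiple anomaly signals” as two or more are assumptions made for illustration.

```python
from dataclasses import dataclass

TEN_LAKHS = 1_000_000  # ₹10 lakhs expressed in rupees

@dataclass
class Decision:
    # Hypothetical fields; a real system would populate these upstream.
    amount: float
    affects_customer_access: bool = False
    first_time_high_risk: bool = False
    anomaly_signals: int = 0

def needs_human_review(d: Decision) -> bool:
    """Return True only for the defined exceptions; everything else is automated."""
    return (
        d.amount > TEN_LAKHS
        or d.affects_customer_access
        or d.first_time_high_risk
        or d.anomaly_signals >= 2  # assumed meaning of "multiple"
    )

print(needs_human_review(Decision(amount=50_000)))                     # False
print(needs_human_review(Decision(amount=1_500_000)))                  # True
print(needs_human_review(Decision(amount=50_000, anomaly_signals=2)))  # True
```

Keeping the exceptions in one small function also makes them easy to audit: the review policy is a handful of lines a compliance officer can read.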

Continuous Model Monitoring

AI models degrade over time. What worked last year might not work today because customer behaviour evolves, fraud patterns shift, and market conditions change.

In 2020, if a 65-year-old customer suddenly started making frequent UPI payments late at night, your risk model would rightly flag it as suspicious. Fast forward to 2026: senior citizens are among the fastest-growing UPI user segments, and that same behaviour is now completely normal. Your model needs to learn this shift, or it becomes a liability instead of an asset.

Your governance framework therefore needs continuous monitoring protocols: tracking model performance, detecting shifts in the underlying data, validating assumptions, and retraining the model when needed. This should be as routine as reconciling your books at month-end.
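One common way to detect this kind of shift is the Population Stability Index (PSI), which compares how a feature was distributed when the model was built with how it is distributed now. The sketch below applies it to the hour of day of payments. The bin edges, the sample data, and the 0.25 alert level (a conventional rule of thumb) are assumptions, not values prescribed by this article.

```python
import math

def bin_shares(values, edges, eps=1e-6):
    """Share of values in each bin. edges must be sorted ascending.

    eps replaces empty-bin shares so the logarithm below stays defined.
    """
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(v >= e for e in edges)] += 1
    return [max(c / len(values), eps) for c in counts]

def psi(baseline, recent, edges):
    """Population Stability Index between two samples of one feature."""
    b = bin_shares(baseline, edges)
    r = bin_shares(recent, edges)
    return sum((ri - bi) * math.log(ri / bi) for bi, ri in zip(b, r))

# Hypothetical payment hours (0-23) for one customer segment
baseline_hours = [10] * 60 + [15] * 30 + [23] * 10  # when the model was built
recent_hours = [10] * 40 + [15] * 30 + [23] * 30    # last month
EDGES = [12, 20]  # bins: morning, afternoon/evening, late night

drift = psi(baseline_hours, recent_hours, EDGES)
if drift > 0.25:  # assumed alert level
    print(f"PSI {drift:.2f}: review and consider retraining")
```

Running a check like this on a schedule for each important input feature is what turns “monitor the model” from an intention into a routine.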

Clear Accountability Lines

When an AI model makes a mistake, who’s responsible? The data scientist who built it? The business team that deployed it? The compliance team that approved it?

Ambiguity here is dangerous. Establish clear ownership: who builds models, who validates them, who monitors them, and who’s accountable for their outcomes. Document it, communicate it, and enforce it.

Making AI Governance Practical

This all sounds good in theory. But how do you actually operationalise it?

Teams should focus on these steps:

- Start by cataloguing every AI model in production. Document what each model does, what data it uses, who owns it, and what decisions it influences. It is surprising how many institutions can’t answer these basic questions.

- Next, establish a model governance committee with cross-functional representation from data science, risk, compliance, legal, and business. Give it real authority to approve, reject, or require changes to AI deployments.

- Invest in explainability tools. The technology exists to make black-box models more transparent, and it can help teams understand and communicate model decisions.

- Build bias testing into the standard model validation process and do not wait for problems to surface. Proactively test for disparate outcomes across customer segments, geographic regions, and demographic groups.
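The cataloguing step in the list above can start very small. Here is a minimal sketch of a model inventory record with a check for the basic questions: what the model does, what data it uses, who owns it, and what it influences. The field names, the one-year validation window, and the example model are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional

@dataclass
class ModelRecord:
    # Hypothetical schema covering the questions every model should answer.
    name: str
    purpose: str
    data_sources: List[str]
    owner: str
    decisions_influenced: List[str]
    last_validated: Optional[date] = None

def governance_gaps(record, today, max_age_days=365):
    """List the basic governance questions this record cannot answer."""
    gaps = []
    if not record.owner:
        gaps.append("no owner")
    if not record.data_sources:
        gaps.append("data sources undocumented")
    if not record.decisions_influenced:
        gaps.append("decisions undocumented")
    if record.last_validated is None:
        gaps.append("never validated")
    elif (today - record.last_validated).days > max_age_days:
        gaps.append("validation out of date")  # assumed one-year window
    return gaps

legacy_model = ModelRecord(
    name="txn-risk-v1",
    purpose="Scores transactions for fraud risk",
    data_sources=["core banking transactions"],
    owner="",  # nobody has claimed it
    decisions_influenced=["transaction holds"],
)

print(governance_gaps(legacy_model, today=date(2026, 1, 15)))
# ['no owner', 'never validated']
```

Even a spreadsheet with these columns works. The point is that every model in production has a row, and every empty cell is a visible gap for the governance committee to close.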

AI isn’t coming to risk and compliance. It’s already here, running in production, making decisions that affect your customers and your balance sheet every single day.

The real question isn’t whether to use AI. It’s whether you’re governing it properly or just hoping nothing goes wrong. Institutions that build strong governance frameworks now will operate with confidence. They’ll catch real fraud faster, waste less time on false alerts, and sleep better knowing they can explain every decision to regulators and customers alike.

Your AI models won’t wait for you to figure out governance. They’re learning, adapting, and making decisions right now. The only choice you have is whether those decisions happen inside a robust framework or in a governance vacuum.